Note

Click here to download the full example code

2.1.1. Analysis of a single session, single subject fMRI dataset¶

In this tutorial, we compare the fMRI signal during periods of auditory stimulation versus periods of rest, using a General Linear Model (GLM).

The dataset comes from an experiment conducted at the FIL by Geriant Rees under the direction of Karl Friston. It is provided by FIL methods group which develops the SPM software.

According to SPM documentation, 96 scans were acquired (repetition time TR=7s) in one session. The paradigm consisted of alternating periods of stimulation and rest, lasting 42s each (that is, for 6 scans). The sesssion started with a rest block.

Auditory stimulation consisted of bi-syllabic words presented binaurally at a

rate of 60 per minute. The functional data starts at scan number 4, that is the

image file fM00223_004.

The whole brain BOLD/EPI images were acquired on a 2T Siemens MAGNETOM Vision system. Each scan consisted of 64 contiguous slices (64x64x64 3mm x 3mm x 3mm voxels). Acquisition of one scan took 6.05s, with the scan to scan repeat time (TR) set arbitrarily to 7s.

The analyse described here is performed in the native space, directly on the original EPI scans without any spatial or temporal preprocessing. (More sensitive results would likely be obtained on the corrected, spatially normalized and smoothed images).

To run this example, you must launch IPython via ipython

--matplotlib in a terminal, or use jupyter-notebook.

Contents

2.1.1.1. Retrieving the data¶

Note

In this tutorial, we load the data using a data downloading

function. To input your own data, you will need to provide

a list of paths to your own files in the subject_data variable.

These should abide to the Brain Imaging Data Structure (BIDS)

organization.

from nistats.datasets import fetch_spm_auditory

subject_data = fetch_spm_auditory()

print(subject_data.func) # print the list of names of functional images

Out:

['/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_004.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_005.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_006.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_007.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_008.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_009.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_010.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_011.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_012.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_013.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_014.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_015.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_016.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_017.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_018.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_019.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_020.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_021.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_022.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_023.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_024.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_025.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_026.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_027.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_028.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_029.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_030.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_031.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_032.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_033.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_034.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_035.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_036.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_037.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_038.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_039.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_040.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_041.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_042.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_043.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_044.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_045.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_046.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_047.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_048.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_049.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_050.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_051.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_052.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_053.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_054.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_055.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_056.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_057.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_058.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_059.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_060.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_061.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_062.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_063.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_064.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_065.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_066.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_067.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_068.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_069.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_070.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_071.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_072.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_073.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_074.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_075.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_076.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_077.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_078.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_079.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_080.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_081.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_082.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_083.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_084.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_085.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_086.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_087.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_088.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_089.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_090.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_091.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_092.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_093.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_094.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_095.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_096.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_097.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_098.img', '/home/kshitij/nilearn_data/spm_auditory/sub001/fM00223/fM00223_099.img']

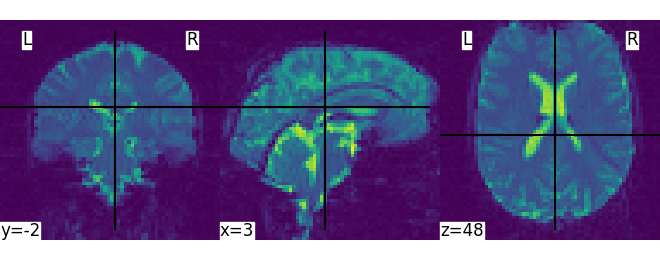

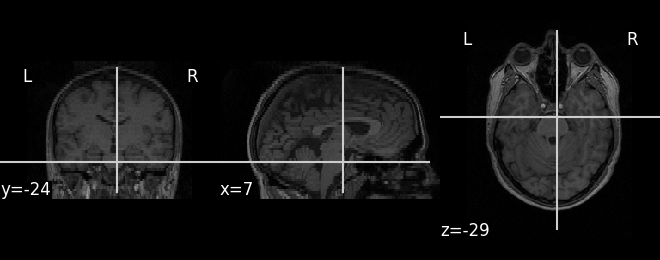

We can display the first functional image and the subject’s anatomy:

from nilearn.plotting import plot_stat_map, plot_anat, plot_img, show

plot_img(subject_data.func[0])

plot_anat(subject_data.anat)

Out:

<nilearn.plotting.displays.OrthoSlicer object at 0x7f6f142da390>

Next, we concatenate all the 3D EPI image into a single 4D image, then we average them in order to create a background image that will be used to display the activations:

from nilearn.image import concat_imgs, mean_img

fmri_img = concat_imgs(subject_data.func)

mean_img = mean_img(fmri_img)

2.1.1.2. Specifying the experimental paradigm¶

We must now provide a description of the experiment, that is, define the timing of the auditory stimulation and rest periods. This is typically provided in an events.tsv file. The path of this file is provided in the dataset.

import pandas as pd

events = pd.read_table(subject_data['events'])

print(events)

Out:

onset duration trial_type

0 0.0 42.0 rest

1 42.0 42.0 active

2 84.0 42.0 rest

3 126.0 42.0 active

4 168.0 42.0 rest

5 210.0 42.0 active

6 252.0 42.0 rest

7 294.0 42.0 active

8 336.0 42.0 rest

9 378.0 42.0 active

10 420.0 42.0 rest

11 462.0 42.0 active

12 504.0 42.0 rest

13 546.0 42.0 active

14 588.0 42.0 rest

15 630.0 42.0 active

2.1.1.3. Performing the GLM analysis¶

It is now time to create and estimate a FirstLevelModel object, that will generate the design matrix using the information provided by the events object.

from nistats.first_level_model import FirstLevelModel

Parameters of the first-level model

t_r=7(s) is the time of repetition of acquisitions

noise_model=’ar1’ specifies the noise covariance model: a lag-1 dependence

standardize=False means that we do not want to rescale the time series to mean 0, variance 1

hrf_model=’spm’ means that we rely on the SPM “canonical hrf” model (without time or dispersion derivatives)

drift_model=’cosine’ means that we model the signal drifts as slow oscillating time functions

high_pass=0.01(Hz) defines the cutoff frequency (inverse of the time period).

fmri_glm = FirstLevelModel(t_r=7,

noise_model='ar1',

standardize=False,

hrf_model='spm',

drift_model='cosine',

high_pass=.01)

Now that we have specified the model, we can run it on the fMRI image

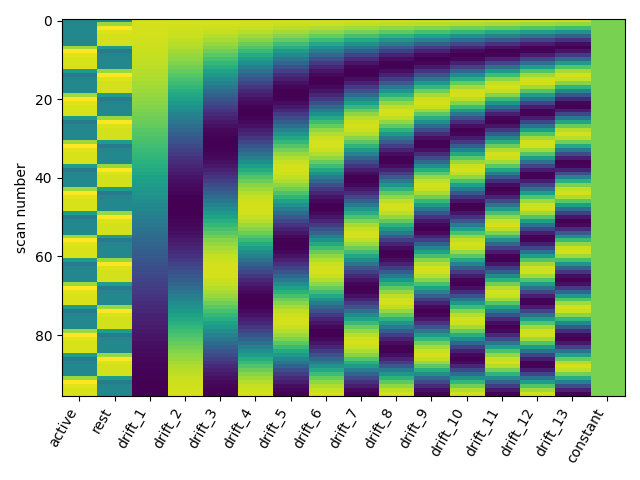

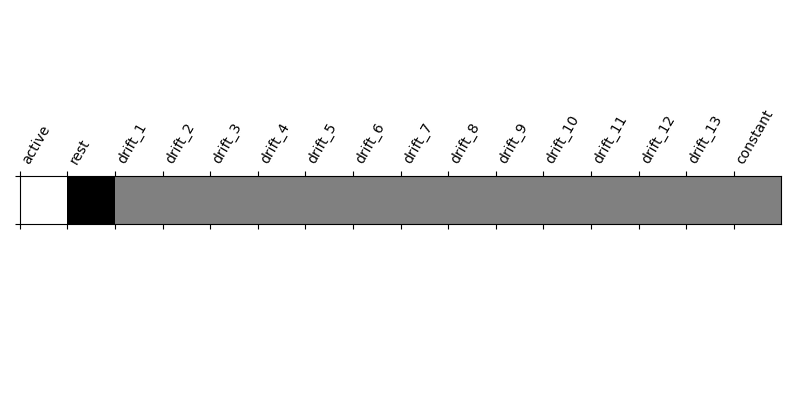

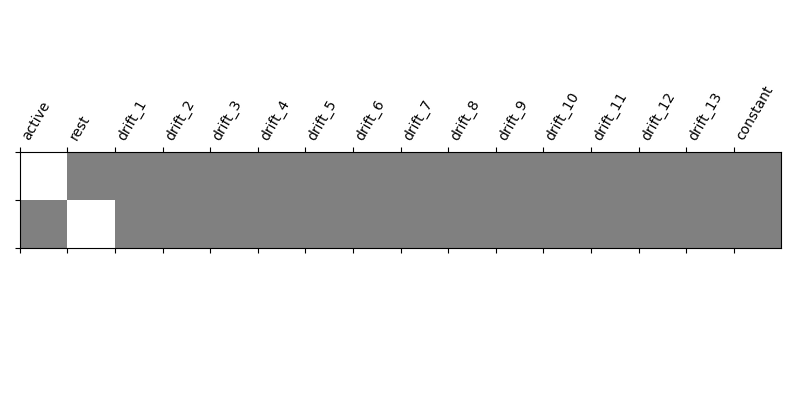

One can inspect the design matrix (rows represent time, and columns contain the predictors).

design_matrix = fmri_glm.design_matrices_[0]

Formally, we have taken the first design matrix, because the model is implictily meant to for multiple runs.

from nistats.reporting import plot_design_matrix

plot_design_matrix(design_matrix)

import matplotlib.pyplot as plt

plt.show()

Out:

/home/kshitij/workspace/nistats-org/nistats-repo/nistats-kchawla-pi/examples/01_tutorials/plot_single_subject_single_run.py:116: UserWarning: Matplotlib is currently using agg, which is a non-GUI backend, so cannot show the figure.

plt.show()

Save the design matrix image to disk first create a directory where you want to write the images

import os

outdir = 'results'

if not os.path.exists(outdir):

os.mkdir(outdir)

from os.path import join

plot_design_matrix(

design_matrix, output_file=join(outdir, 'design_matrix.png'))

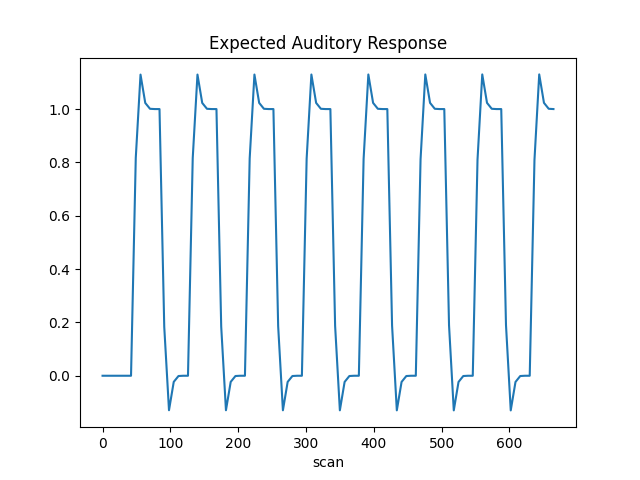

The first column contains the expected response profile of regions which are sensitive to the auditory stimulation. Let’s plot this first column

plt.plot(design_matrix['active'])

plt.xlabel('scan')

plt.title('Expected Auditory Response')

plt.show()

Out:

/home/kshitij/workspace/nistats-org/nistats-repo/nistats-kchawla-pi/examples/01_tutorials/plot_single_subject_single_run.py:139: UserWarning: Matplotlib is currently using agg, which is a non-GUI backend, so cannot show the figure.

plt.show()

2.1.1.4. Detecting voxels with significant effects¶

To access the estimated coefficients (Betas of the GLM model), we created contrast with a single ‘1’ in each of the columns: The role of the contrast is to select some columns of the model –and potentially weight them– to study the associated statistics. So in a nutshell, a contrast is a weighted combination of the estimated effects. Here we can define canonical contrasts that just consider the two effects in isolation —let’s call them “conditions”— then a contrast that makes the difference between these conditions.

from numpy import array

conditions = {

'active': array([1., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.,

0.]),

'rest': array([0., 1., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.,

0.]),

}

We can then compare the two conditions ‘active’ and ‘rest’ by defining the corresponding contrast:

active_minus_rest = conditions['active'] - conditions['rest']

Let’s look at it: plot the coefficients of the contrast, indexed by the names of the columns of the design matrix.

from nistats.reporting import plot_contrast_matrix

plot_contrast_matrix(active_minus_rest, design_matrix=design_matrix)

Out:

<matplotlib.axes._subplots.AxesSubplot object at 0x7f6f13da6e10>

Below, we compute the estimated effect. It is in BOLD signal unit, but has no statistical guarantees, because it does not take into account the associated variance.

eff_map = fmri_glm.compute_contrast(active_minus_rest,

output_type='effect_size')

In order to get statistical significance, we form a t-statistic, and directly convert it into z-scale. The z-scale means that the values are scaled to match a standard Gaussian distribution (mean=0, variance=1), across voxels, if there were no effects in the data.

z_map = fmri_glm.compute_contrast(active_minus_rest,

output_type='z_score')

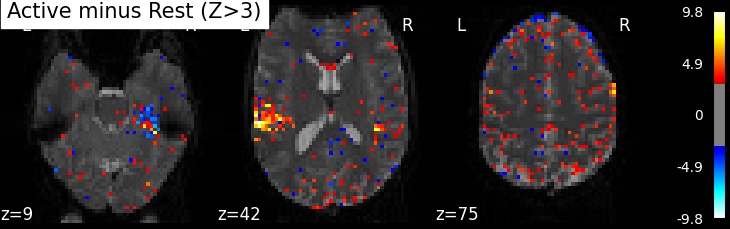

Plot thresholded z scores map.

We display it on top of the average functional image of the series (could be the anatomical image of the subject). We use arbitrarily a threshold of 3.0 in z-scale. We’ll see later how to use corrected thresholds. We will show 3 axial views, with display_mode=’z’ and cut_coords=3.

plot_stat_map(z_map, bg_img=mean_img, threshold=3.0,

display_mode='z', cut_coords=3, black_bg=True,

title='Active minus Rest (Z>3)')

plt.show()

Out:

/home/kshitij/workspace/nistats-org/nistats-repo/nistats-kchawla-pi/examples/01_tutorials/plot_single_subject_single_run.py:204: UserWarning: Matplotlib is currently using agg, which is a non-GUI backend, so cannot show the figure.

plt.show()

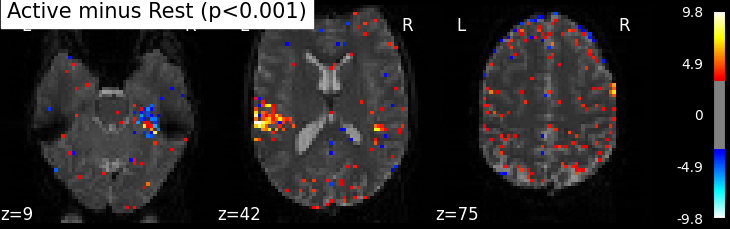

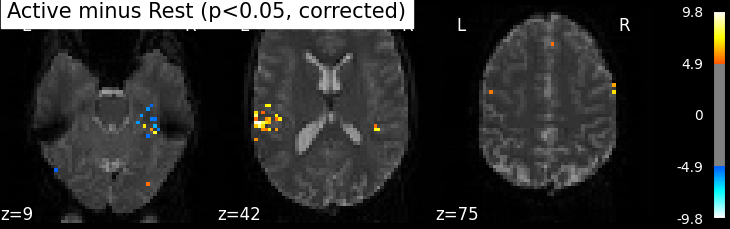

Statistical significance testing. One should worry about the statistical validity of the procedure: here we used an arbitrary threshold of 3.0 but the threshold should provide some guarantees on the risk of false detections (aka type-1 errors in statistics). One suggestion is to control the false positive rate (fpr, denoted by alpha) at a certain level, e.g. 0.001: this means that there is 0.1% chance of declaring an inactive voxel, active.

from nistats.thresholding import map_threshold

_, threshold = map_threshold(z_map, alpha=.001, height_control='fpr')

print('Uncorrected p<0.001 threshold: %.3f' % threshold)

plot_stat_map(z_map, bg_img=mean_img, threshold=threshold,

display_mode='z', cut_coords=3, black_bg=True,

title='Active minus Rest (p<0.001)')

plt.show()

Out:

Uncorrected p<0.001 threshold: 3.291

/home/kshitij/workspace/nistats-org/nistats-repo/nistats-kchawla-pi/examples/01_tutorials/plot_single_subject_single_run.py:221: UserWarning: Matplotlib is currently using agg, which is a non-GUI backend, so cannot show the figure.

plt.show()

The problem is that with this you expect 0.001 * n_voxels to show up while they’re not active — tens to hundreds of voxels. A more conservative solution is to control the family wise error rate, i.e. the probability of making only one false detection, say at 5%. For that we use the so-called Bonferroni correction.

_, threshold = map_threshold(z_map, alpha=.05, height_control='bonferroni')

print('Bonferroni-corrected, p<0.05 threshold: %.3f' % threshold)

plot_stat_map(z_map, bg_img=mean_img, threshold=threshold,

display_mode='z', cut_coords=3, black_bg=True,

title='Active minus Rest (p<0.05, corrected)')

plt.show()

Out:

Bonferroni-corrected, p<0.05 threshold: 4.934

/home/kshitij/workspace/nistats-org/nistats-repo/nistats-kchawla-pi/examples/01_tutorials/plot_single_subject_single_run.py:235: UserWarning: Matplotlib is currently using agg, which is a non-GUI backend, so cannot show the figure.

plt.show()

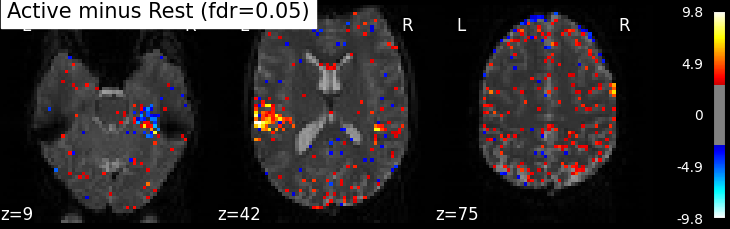

This is quite conservative indeed! A popular alternative is to control the expected proportion of false discoveries among detections. This is called the False discovery rate.

_, threshold = map_threshold(z_map, alpha=.05, height_control='fdr')

print('False Discovery rate = 0.05 threshold: %.3f' % threshold)

plot_stat_map(z_map, bg_img=mean_img, threshold=threshold,

display_mode='z', cut_coords=3, black_bg=True,

title='Active minus Rest (fdr=0.05)')

plt.show()

Out:

False Discovery rate = 0.05 threshold: 2.905

/home/kshitij/workspace/nistats-org/nistats-repo/nistats-kchawla-pi/examples/01_tutorials/plot_single_subject_single_run.py:248: UserWarning: Matplotlib is currently using agg, which is a non-GUI backend, so cannot show the figure.

plt.show()

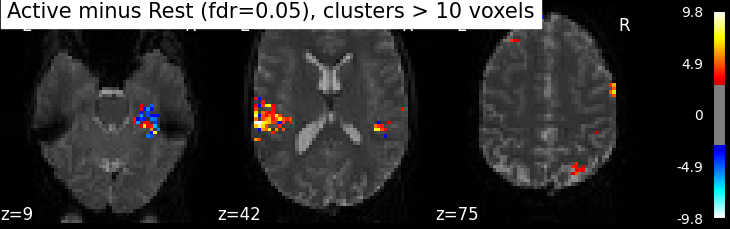

Finally people like to discard isolated voxels (aka “small clusters”) from these images. It is possible to generate a thresholded map with small clusters removed by providing a cluster_threshold argument. Here clusters smaller than 10 voxels will be discarded.

clean_map, threshold = map_threshold(

z_map, alpha=.05, height_control='fdr', cluster_threshold=10)

plot_stat_map(clean_map, bg_img=mean_img, threshold=threshold,

display_mode='z', cut_coords=3, black_bg=True,

title='Active minus Rest (fdr=0.05), clusters > 10 voxels')

plt.show()

Out:

/home/kshitij/workspace/nistats-org/nistats-repo/nistats-kchawla-pi/examples/01_tutorials/plot_single_subject_single_run.py:262: UserWarning: Matplotlib is currently using agg, which is a non-GUI backend, so cannot show the figure.

plt.show()

We can save the effect and zscore maps to the disk.

We can furthermore extract and report the found positions in a table.

from nistats.reporting import get_clusters_table

table = get_clusters_table(z_map, stat_threshold=threshold,

cluster_threshold=20)

print(table)

Out:

Cluster ID X Y Z Peak Stat Cluster Size (mm3)

0 1 -60.0 -6.0 42.0 9.811979 4050

1 1a -63.0 6.0 36.0 8.601922

2 1b -63.0 0.0 42.0 8.399054

3 1c -48.0 -15.0 39.0 8.364058

4 2 60.0 0.0 36.0 9.605128 1512

5 2a 45.0 -12.0 42.0 7.590200

6 3 63.0 12.0 27.0 8.284500 972

7 3a 51.0 3.0 30.0 6.968355

8 3b 54.0 9.0 39.0 3.565609

9 4 36.0 -3.0 15.0 8.087451 1188

10 5 -63.0 -18.0 27.0 5.807510 594

11 5a -63.0 -21.0 42.0 5.646352

12 5b -60.0 -21.0 33.0 5.416271

13 6 45.0 -18.0 57.0 5.710963 702

14 6a 36.0 -12.0 57.0 5.633746

15 6b 30.0 -9.0 66.0 4.796135

16 6c 36.0 -15.0 69.0 4.254544

17 7 -12.0 -15.0 93.0 5.522477 621

18 7a -6.0 -15.0 99.0 4.713852

19 7b -3.0 -18.0 90.0 4.270733

20 7c -18.0 -12.0 96.0 4.085568

21 8 -24.0 -24.0 90.0 5.331806 648

22 8a -36.0 -24.0 90.0 4.700088

23 8b -12.0 -24.0 90.0 4.037845

24 8c -36.0 -24.0 84.0 3.527477

25 9 -15.0 -60.0 66.0 4.835099 837

26 9a -15.0 -60.0 57.0 4.615642

27 9b -6.0 -63.0 63.0 4.091568

This table can be saved for future use.

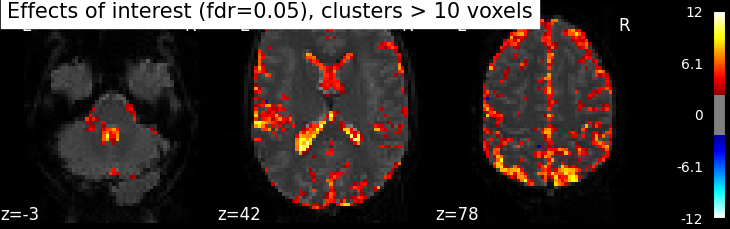

Performing an F-test.

“active vs rest” is a typical t test: condition versus baseline. Another popular type of test is an F test in which one seeks whether a certain combination of conditions (possibly two-, three- or higher-dimensional) explains a significant proportion of the signal. Here one might for instance test which voxels are well explained by the combination of the active and rest condition.

Specify the contrast and compute the corresponding map. Actually, the contrast specification is done exactly the same way as for t- contrasts.

import numpy as np

effects_of_interest = np.vstack((conditions['active'], conditions['rest']))

plot_contrast_matrix(effects_of_interest, design_matrix)

plt.show()

z_map = fmri_glm.compute_contrast(effects_of_interest,

output_type='z_score')

Out:

/home/kshitij/workspace/nistats-org/nistats-repo/nistats-kchawla-pi/examples/01_tutorials/plot_single_subject_single_run.py:302: UserWarning: Matplotlib is currently using agg, which is a non-GUI backend, so cannot show the figure.

plt.show()

Note that the statistic has been converted to a z-variable, which makes it easier to represent it.

clean_map, threshold = map_threshold(

z_map, alpha=.05, height_control='fdr', cluster_threshold=10)

plot_stat_map(clean_map, bg_img=mean_img, threshold=threshold,

display_mode='z', cut_coords=3, black_bg=True,

title='Effects of interest (fdr=0.05), clusters > 10 voxels')

plt.show()

Out:

/home/kshitij/workspace/nistats-org/nistats-repo/nistats-kchawla-pi/examples/01_tutorials/plot_single_subject_single_run.py:317: UserWarning: Matplotlib is currently using agg, which is a non-GUI backend, so cannot show the figure.

plt.show()

Oops, there is a lot of non-neural signal in there (ventricles, arteries)…

Total running time of the script: ( 0 minutes 7.402 seconds)